If you’ve ever watched two “smart” agents debate politely while your cloud bill ticks like a taxi meter, welcome to my Tuesday. After 15 years shipping .NET systems, I’ve learned the bottleneck isn’t model IQ – it’s choreography: clear roles, controlled context, and provable trust between processes. A recent incident with our research bot and summarizer forced me to harden the stack: short‑lived A2A tokens + mTLS, explicit budgets, loop guards, and end‑to‑end telemetry. The result? Fewer runaways, lower costs, and consistent outputs. In this post I’ll walk you through the playbook I now use: Semantic Kernel (SK) for multi‑agent coordination, a practical Agent‑to‑Agent (A2A) handshake, and a drop‑in research→summary pipeline you can paste into your .NET repo today. If one smart agent gives you a demo, two that cooperate safely give you a product.

The Rise of Agentic Systems (2025)

Why this matters now:

- Models plateau, systems scale. Incremental model upgrades help; orchestration multiplies value.

- Specialization beats generalization. A focused agent with curated tools often outperforms a generalist.

- Compliance & cost control. Orchestration encodes policy (PII handling, rate limits) and budget (token, time, retries).

Agent platforms exploded this year, but if you’re in .NET land, Semantic Kernel gives you a pragmatic, strongly‑typed way to compose skills, memory, and planners – without hiding the wires. Let’s use that to build something real.

Remote Agents & A2A: Architecture and Security Handshake

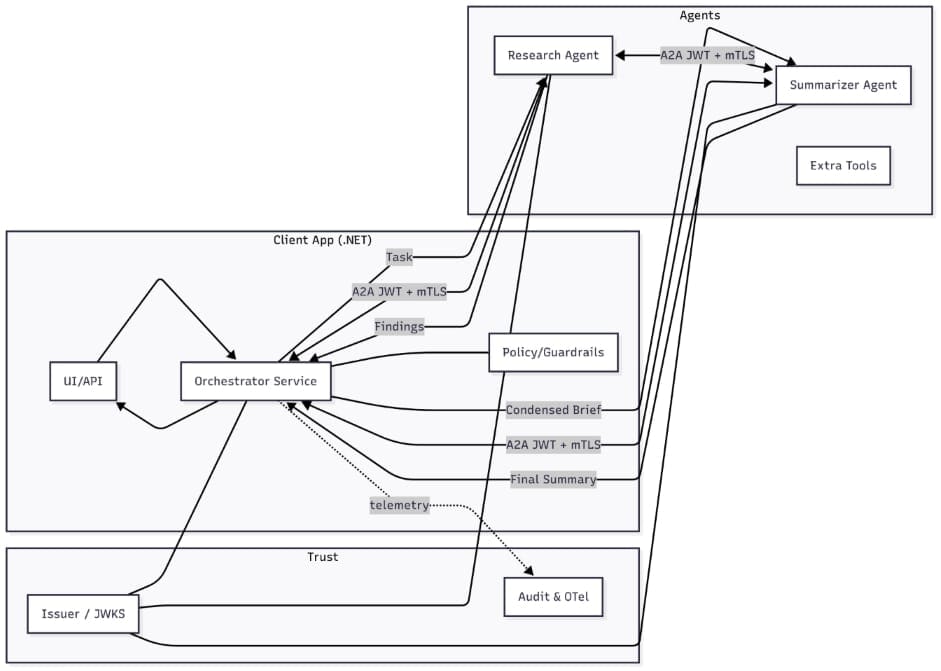

We’ll separate concerns into three layers:

- Agents – autonomous workers with a clearly defined role, tools (skills/plugins), and input/output contracts.

- Orchestrator – the conductor: routes tasks, sets goals, enforces budgets, and applies safety policies.

- Transport & Trust – how agents talk (HTTP/gRPC/queues) and prove who they are (A2A handshake).

Reference Architecture (bird’s‑eye)

A2A Handshake (practical recipe)

For remote agents (different processes or hosts) use a short‑lived, audience‑scoped token with mutual trust.

- Discovery: GET /.well-known/agent returns agent metadata (ID, capabilities, JWKS URL, required scopes).

- Nonce challenge: caller requests POST /handshake/start → receives { nonce, expiresAt }.

- Proof: caller signs the nonce with its private key and posts POST /handshake/finish with a signed JWT (subject=caller, audience=callee, nonce claim).

- Grant: callee verifies signature & claims, then issues a short‑lived access token (1–5 minutes) with minimal scopes.

- Session: all subsequent calls include Authorization: Bearer <access-token>; optionally pin with mTLS.
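For the caller's half of the Proof step, a minimal sketch might look like the following. It assumes an ECDSA P-256 caller key and the System.IdentityModel.Tokens.Jwt package; HandshakeClient and BuildProofJwt are hypothetical names for illustration, and the agent IDs are whatever your agents publish in their metadata.

```csharp
using System;
using System.IdentityModel.Tokens.Jwt;
using System.Security.Claims;
using System.Security.Cryptography;
using Microsoft.IdentityModel.Tokens;

public static class HandshakeClient
{
    // Builds the signed proof that the caller posts to /handshake/finish.
    // callerId and calleeId are assumed to be the IDs from each agent's metadata.
    public static string BuildProofJwt(ECDsa callerKey, string callerId, string calleeId, string nonce)
    {
        var credentials = new SigningCredentials(
            new ECDsaSecurityKey(callerKey), SecurityAlgorithms.EcdsaSha256);

        var proof = new JwtSecurityToken(
            issuer: callerId,
            audience: calleeId,
            claims: new[]
            {
                new Claim(JwtRegisteredClaimNames.Sub, callerId),
                new Claim("nonce", nonce) // echoes the challenge from /handshake/start
            },
            notBefore: DateTime.UtcNow,
            expires: DateTime.UtcNow.AddMinutes(1), // the proof only has to survive one round trip
            signingCredentials: credentials);

        return new JwtSecurityTokenHandler().WriteToken(proof);
    }
}
```

The caller would then post the result as the jwt field of the /handshake/finish body and send the returned access token in the Authorization header on later calls.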

Minimal ASP.NET Core handshake (server side)

using System.Collections.Concurrent;

using System.IdentityModel.Tokens.Jwt;

using System.Security.Claims;

using System.Security.Cryptography;

using Microsoft.AspNetCore.Mvc;

using Microsoft.IdentityModel.Tokens;

var builder = WebApplication.CreateBuilder(args);

var app = builder.Build();

// Ephemeral ECDSA key used to sign short-lived access tokens

using var signing = ECDsa.Create(ECCurve.NamedCurves.nistP256);

var signingKey = new ECDsaSecurityKey(signing) { KeyId = Guid.NewGuid().ToString("N") };

var nonces = new ConcurrentDictionary<string, DateTimeOffset>();

app.MapGet("/.well-known/agent", () => new

{

id = "agent:research",

capabilities = new[] { "web.search", "html.extract" },

jwks = "https://issuer.example.com/.well-known/jwks.json",

requiredScopes = new[] { "task.read", "task.write" }

});

app.MapPost("/handshake/start", () =>

{

var nonce = Convert.ToBase64String(RandomNumberGenerator.GetBytes(32));

nonces[nonce] = DateTimeOffset.UtcNow.AddMinutes(2);

return Results.Ok(new { nonce, expiresAt = nonces[nonce] });

});

app.MapPost("/handshake/finish", ([FromBody] FinishReq req) =>

{

var handler = new JwtSecurityTokenHandler();

var token = handler.ReadJwtToken(req.Jwt);

// Basic checks: caller is named, nonce exists and is fresh; TryRemove consumes it so it cannot be replayed

var nonce = token.Payload.TryGetValue("nonce", out var v) ? v?.ToString() : null;

if (nonce is null || string.IsNullOrEmpty(token.Subject) || !nonces.TryRemove(nonce, out var exp) || exp < DateTimeOffset.UtcNow)

return Results.Unauthorized();

// Verify signature using caller's public key (omitted: fetch JWKS by 'iss'/'kid')

// Assumed valid for the example only: ReadJwtToken parses the token but does NOT validate it

// Issue short-lived access token for this session

var access = new JwtSecurityToken(

issuer: "https://research-agent.example.com",

// The caller presents this token back to this agent, so the audience is this agent and the subject is the caller

audience: "https://research-agent.example.com",

claims: new[] { new Claim("sub", token.Subject), new Claim("scope", "task.read task.write") },

notBefore: DateTime.UtcNow,

expires: DateTime.UtcNow.AddMinutes(3),

signingCredentials: new SigningCredentials(signingKey, SecurityAlgorithms.EcdsaSha256)

);

var accessJwt = handler.WriteToken(access);

return Results.Ok(new { access_token = accessJwt, token_type = "Bearer", expires_in = 180 });

});

app.Run();

// Type declarations must come after all top-level statements

record FinishReq(string Jwt);

Notes

- Use issuer‑pinned JWKS for verification, rotate keys, and keep access tokens short.

- Prefer mTLS between trusted services; use JWT for scoped delegation.

- Include budget claims (max tokens, max turns, deadline) to let receivers enforce cost caps.
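The signature check that the server example leaves out could be sketched roughly like this. It assumes you have already resolved the caller's public key (for example from its JWKS, looked up by the unvalidated iss and kid) and that proofs are signed with ES256; ProofVerifier is a hypothetical helper, not part of any library.

```csharp
using System;
using System.IdentityModel.Tokens.Jwt;
using Microsoft.IdentityModel.Tokens;

public static class ProofVerifier
{
    // Returns the validated token, or null when the signature, issuer, audience, or lifetime check fails.
    public static JwtSecurityToken? Verify(string jwt, SecurityKey callerKey, string expectedCaller, string selfId)
    {
        var parameters = new TokenValidationParameters
        {
            ValidIssuer = expectedCaller,
            ValidAudience = selfId,
            IssuerSigningKey = callerKey,
            ValidateIssuerSigningKey = true,
            // Pin the algorithm so unsigned or unexpectedly signed tokens are rejected.
            ValidAlgorithms = new[] { SecurityAlgorithms.EcdsaSha256 },
            ClockSkew = TimeSpan.FromSeconds(30)
        };

        try
        {
            new JwtSecurityTokenHandler().ValidateToken(jwt, parameters, out var validated);
            return validated as JwtSecurityToken;
        }
        catch (Exception ex) when (ex is SecurityTokenException or ArgumentException)
        {
            return null; // treat every validation failure as "unauthorized"
        }
    }
}
```

Only after Verify returns a token should the handler read the nonce claim and issue an access token.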

Designing the Orchestrator: Roles, Channels, Guardrails

An orchestrator is a traffic cop + concierge. It should:

- Assign roles: who handles which sub‑task.

- Manage context: provide the right memory/tools to the right agent.

- Route messages: direct, broadcast, or mediated.

- Enforce limits: max turns, timeouts, token/cost budgets.

- Observe: structured logs, traces, and per‑agent metrics.
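To make "enforce limits" concrete, here is one way a per-call timeout could be layered on top of the caller's cancellation token. Limits and WithTimeoutAsync are hypothetical names; the sketch uses only the base class library.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class Limits
{
    // Runs one agent call under its own time budget while still honoring the caller's token.
    public static async Task<T> WithTimeoutAsync<T>(
        Func<CancellationToken, Task<T>> call, TimeSpan maxTime, CancellationToken outer)
    {
        using var cts = CancellationTokenSource.CreateLinkedTokenSource(outer);
        cts.CancelAfter(maxTime);
        try
        {
            return await call(cts.Token);
        }
        catch (OperationCanceledException) when (!outer.IsCancellationRequested)
        {
            // Only the per-call timer fired: report a budget violation rather than a user cancel.
            throw new TimeoutException($"Agent call exceeded {maxTime.TotalSeconds:F0}s");
        }
    }
}
```

An orchestrator could wrap each agent invocation in this helper, passing the time limit configured for that agent, so one slow agent cannot consume the whole task deadline.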

Role Assignment

Keep roles explicit and stable. Define them as code, not as vibes in prompts:

public enum AgentRole { Researcher, Summarizer }

public record AgentSpec(

AgentRole Role,

string Endpoint,

string[] Tools,

string[] Scopes,

TimeSpan MaxTime,

int MaxTurns

);

Communication Channels

Pick one primary channel. In practice:

- HTTP/gRPC for request/response.

- Queue/Bus (Azure Service Bus, RabbitMQ) for fan‑out and resilience.

- In‑proc Channels for fast local agent swarms.

A minimal orchestrator using System.Threading.Channels works great for local multi‑agent pipelines.

using System.Threading.Channels;

public record AgentMessage(string From, string To, string Kind, string Text, IDictionary<string,object>? Meta=null);

public interface IAgent

{

string Id { get; }

AgentRole Role { get; }

Task<IEnumerable<AgentMessage>> HandleAsync(AgentMessage input, CancellationToken ct);

}

public class Orchestrator

{

private readonly IReadOnlyDictionary<AgentRole, IAgent> _agents;

private readonly Channel<AgentMessage> _bus = Channel.CreateUnbounded<AgentMessage>();

public Orchestrator(IEnumerable<IAgent> agents)

=> _agents = agents.ToDictionary(a => a.Role);

public async Task<AgentMessage> RunAsync(string goal, CancellationToken ct)

{

var start = new AgentMessage("user", "research", "goal", goal);

await _bus.Writer.WriteAsync(start, ct);

var turns = 0;

while (await _bus.Reader.WaitToReadAsync(ct))

{

while (_bus.Reader.TryRead(out var msg))

{

if (++turns > 12) throw new InvalidOperationException("Loop guard tripped");

if (msg.To == "final") return msg; // terminal

var target = msg.To switch

{

"research" => AgentRole.Researcher,

"summarize" => AgentRole.Summarizer,

_ => throw new InvalidOperationException($"Unknown target {msg.To}")

};

var agent = _agents[target];

var outs = await agent.HandleAsync(msg, ct);

foreach (var o in outs) await _bus.Writer.WriteAsync(o, ct);

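}

// Only this loop writes to the bus, so a drained bus means no agent produced a follow-up.

// Break so the run fails fast instead of waiting until the token is cancelled.

break;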

}

throw new InvalidOperationException("Empty run");

}

}

Guardrails & Budgets

Add max turns, deadline, and cost envelope. Budget can live in message metadata and be decremented by agents. If you use SK tools, also enforce token limits per call.

public static class Budget

{

public static void Decrement(IDictionary<string,object> meta, string key, int amount)

{

if (!meta.TryGetValue(key, out var v)) throw new InvalidOperationException($"Missing budget {key}");

var remaining = Convert.ToInt32(v) - amount;

if (remaining < 0) throw new InvalidOperationException($"Budget exceeded: {key}");

meta[key] = remaining;

}

}

Hands‑On: Build a Research‑and‑Summarize Pipeline with Two Agents

We’ll implement two cooperating agents using Semantic Kernel:

- Researcher: takes a topic, performs web search & extraction (stubbed here), outputs a concise brief.

- Summarizer: consumes the brief and produces an executive summary with bullet highlights.

Setup Semantic Kernel in .NET

# Install packages

dotnet add package Microsoft.SemanticKernel

dotnet add package Microsoft.SemanticKernel.Plugins.Core

# If you use Azure OpenAI / OpenAI connectors add their packages too

using Microsoft.SemanticKernel;

using Microsoft.SemanticKernel.ChatCompletion;

using Microsoft.SemanticKernel.Connectors.OpenAI;

var builder = Kernel.CreateBuilder();

// Choose your provider

builder.AddOpenAIChatCompletion("gpt-4o", Environment.GetEnvironmentVariable("OPENAI_API_KEY") ?? throw new InvalidOperationException("OPENAI_API_KEY is not set"));

// Or: builder.AddAzureOpenAIChatCompletion(deploymentName, endpoint, key);

var kernel = builder.Build();

Implement the Researcher Agent

We’ll keep “web search” as a replaceable function to avoid coupling to a vendor. In production you would call Bing/WebSearch API + readability extraction.

public class ResearcherAgent : IAgent

{

private readonly Kernel _kernel;

public string Id => "agent:research";

public AgentRole Role => AgentRole.Researcher;

public ResearcherAgent(Kernel kernel) => _kernel = kernel;

private static Task<string[]> SearchAsync(string query, CancellationToken ct)

{

// TODO: Replace with real web search. Stubbing deterministic outputs is great for tests.

string[] docs =

{

$"{query}: recent study shows multi-agent systems boost task completion by orchestrating roles.",

$"{query}: design patterns emphasize budgets, guardrails, and context windows.",

$"{query}: A2A uses JWT + mTLS + budget claims to control cost and permissions."

};

return Task.FromResult(docs);

}

public async Task<IEnumerable<AgentMessage>> HandleAsync(AgentMessage input, CancellationToken ct)

{

if (input.Kind == "goal")

{

var hits = await SearchAsync(input.Text, ct);

// Condense to a brief using the model

var chat = _kernel.GetRequiredService<IChatCompletionService>();

var sys = "You are a focused research assistant. Produce a concise, source-agnostic brief (<= 200 words)";

// Build the conversation with ChatHistory, the type the chat completion service expects

var history = new ChatHistory();

history.AddSystemMessage(sys);

history.AddUserMessage(string.Join("\n", hits));

var brief = await chat.GetChatMessageContentAsync(history, kernel: _kernel, cancellationToken: ct);

return new[]

{

new AgentMessage(Id, "summarize", "brief", brief.Content ?? string.Empty)

{ Meta = new Dictionary<string,object>{{"tokens", 600}} }

};

}

return Array.Empty<AgentMessage>();

}

}

Implement the Summarizer Agent

public class SummarizerAgent : IAgent

{

private readonly Kernel _kernel;

public string Id => "agent:summary";

public AgentRole Role => AgentRole.Summarizer;

public SummarizerAgent(Kernel kernel) => _kernel = kernel;

public async Task<IEnumerable<AgentMessage>> HandleAsync(AgentMessage input, CancellationToken ct)

{

if (input.Kind != "brief") return Array.Empty<AgentMessage>();

var chat = _kernel.GetRequiredService<IChatCompletionService>();

var sys = "Summarize the brief into: TL;DR (3 bullets), Risks (2 bullets), Next actions (3 bullets).";

var history = new ChatHistory();

history.AddSystemMessage(sys);

history.AddUserMessage(input.Text);

var reply = await chat.GetChatMessageContentAsync(history, kernel: _kernel, cancellationToken: ct);

var final = $"# Executive Summary\n{reply.Content}";

return new[] { new AgentMessage(Id, "final", "done", final) };

}

}

Wire the Orchestrator and Run

// Composition root

var research = new ResearcherAgent(kernel);

var summary = new SummarizerAgent(kernel);

var orch = new Orchestrator(new IAgent[] { research, summary });

var cts = new CancellationTokenSource(TimeSpan.FromSeconds(30));

var result = await orch.RunAsync("multi-agent orchestration best practices", cts.Token);

Console.WriteLine(result.Text);

You now have a tiny pipeline that exercises role assignment, message routing, guardrails (max turns), and model use via SK.

Add Observability (logs, traces, costs)

- Structured logs per turn: From, To, Kind, tokens, duration, cost.

- OpenTelemetry: add spans for each agent call and A2A handshake.

- Cost envelope: track prompt+completion tokens; stop when budget hits zero.

using System.Diagnostics;

public static class Telemetry

{

public static IDisposable TraceScope(string name)

{

var sw = Stopwatch.StartNew();

return new DisposableAction(() => Console.WriteLine($"{name} took {sw.ElapsedMilliseconds}ms"));

}

private sealed class DisposableAction : IDisposable

{

private readonly Action _onDispose; public DisposableAction(Action a) => _onDispose = a;

public void Dispose() => _onDispose();

}

}

Wrap the body of each HandleAsync in using (Telemetry.TraceScope(Id)) { ... } and emit token counters after each model call.
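If you later swap the stopwatch for real spans, System.Diagnostics.ActivitySource is the type OpenTelemetry for .NET listens to. The sketch below shows one possible shape; the source name "Agents.Orchestrator", the tag names, and the AgentTracing helper are all assumptions for illustration.

```csharp
using System;
using System.Diagnostics;
using System.Threading.Tasks;

public static class AgentTracing
{
    // Assumed source name: register the same name with your OpenTelemetry tracer provider.
    public static readonly ActivitySource Source = new("Agents.Orchestrator");

    public static async Task<T> TraceAsync<T>(string agentId, string kind, Func<Task<T>> call)
    {
        // StartActivity returns null when nothing is listening, hence the null-conditional calls.
        using var activity = Source.StartActivity($"agent.handle {agentId}");
        activity?.SetTag("agent.id", agentId);
        activity?.SetTag("message.kind", kind);
        try
        {
            return await call();
        }
        catch (Exception ex)
        {
            // Mark the span as failed, then let the orchestrator see the original exception.
            activity?.SetStatus(ActivityStatusCode.Error, ex.Message);
            throw;
        }
    }
}
```

Because the span is disposed when the call finishes, its duration covers the whole agent turn, including any model calls made inside it.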

Debugging Agent Conversations (that actually works)

When agents misbehave, it’s usually context, tools, or looping.

- Record every turn as a typed struct with: prompt, tool-calls, outputs, tokens, cost, latency, traceId.

- Deterministic test harness: stub external calls (as we did for search) and replay with fixed seeds.

- Prompt diffing: snapshot prompts and use a simple diff to see what changed across runs.

- Conversation probes: add a hidden instruction to ask the agent to explain why it chose a tool (chain‑of‑thought is not logged, but you can request rationales as structured outputs: reasons, confidence, alternatives).

- Single‑step mode: execute exactly one tool call then stop, so you can inspect state before continuing.
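Prompt diffing does not need a diff library to be useful. As a rough sketch (PromptDiff is a hypothetical helper, and a line-set comparison is a simplification of a real diff), you could compare two prompt snapshots like this:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class PromptDiff
{
    // Line-set diff: reports lines that disappeared from, or were added to, a prompt snapshot.
    // It ignores ordering and duplicate lines, which is usually enough to spot prompt drift.
    public static IEnumerable<string> Diff(string before, string after)
    {
        var oldLines = before.Split('\n').Select(l => l.TrimEnd('\r')).ToArray();
        var newLines = after.Split('\n').Select(l => l.TrimEnd('\r')).ToArray();

        foreach (var line in oldLines.Except(newLines)) yield return "- " + line;
        foreach (var line in newLines.Except(oldLines)) yield return "+ " + line;
    }
}
```

Running it over the stored prompts of a passing and a failing run quickly shows whether the context, rather than the model, changed between them.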

Example: turn record

public record Turn(

string From, string To, string Kind,

string Prompt, string Output,

int PromptTokens, int CompletionTokens,

double CostUsd, long Ms, string TraceId

);

Store turns (SQLite/Seq/ELK). Add a simple viewer that groups by TraceId and renders the conversation timeline.
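The viewer can start as a few lines of LINQ. This is only a sketch: TurnViewer is a hypothetical name, the output format is arbitrary, and the Turn record is repeated so the example compiles on its own.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Same shape as the Turn record above, repeated so the sketch compiles on its own.
public record Turn(
    string From, string To, string Kind,
    string Prompt, string Output,
    int PromptTokens, int CompletionTokens,
    double CostUsd, long Ms, string TraceId);

public static class TurnViewer
{
    // Renders one text block per trace, keeping turns in the order they were recorded.
    public static IEnumerable<string> Render(IEnumerable<Turn> turns) =>
        turns.GroupBy(t => t.TraceId).Select(g =>
            $"trace {g.Key}: {g.Count()} turns, {g.Sum(t => t.PromptTokens + t.CompletionTokens)} tokens\n" +
            string.Join("\n", g.Select(t => $"  {t.From} -> {t.To} [{t.Kind}] {t.Ms}ms")));
}
```

GroupBy keeps both the groups and the turns inside each group in their original order, so the rendered timeline matches the order in which the turns were stored.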

Runaway Loops: Best Practices

Runaway loops are the “while(true){}” of agent systems. Prevent, detect, and break them.

- Hard caps: max turns per task (e.g., 12), per‑agent max retries (e.g., 2), max wall‑clock time.

- Loop signature: hash last N messages; if it repeats, force a different strategy (e.g., escalate to reviewer agent).

- Budget claims: put max_tokens, max_calls, deadline in the A2A token; receivers must enforce.

- Progress checks: require each turn to declare a delta (what changed). If none, terminate.

- Reflect & re‑plan: insert a reflector agent every K turns to summarize progress and either stop or pivot.
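The progress check from the list above could be sketched as a small stateful guard. The "delta" metadata key and the ProgressGuard class are assumptions for illustration; the default of two stalled turns is slightly more forgiving than terminating on the first missing delta, so pass 1 if you want the strict behavior.

```csharp
using System;
using System.Collections.Generic;

public sealed class ProgressGuard
{
    private readonly int _maxStalledTurns;
    private int _stalled;

    public ProgressGuard(int maxStalledTurns = 2) => _maxStalledTurns = maxStalledTurns;

    // Call once per turn with the message metadata; "delta" is an assumed key naming what changed.
    public void Check(IDictionary<string, object>? meta)
    {
        var hasDelta = meta is not null
            && meta.TryGetValue("delta", out var d)
            && !string.IsNullOrWhiteSpace(d?.ToString());

        // Any declared progress resets the counter; silence accumulates.
        _stalled = hasDelta ? 0 : _stalled + 1;

        if (_stalled >= _maxStalledTurns)
            throw new InvalidOperationException($"No progress for {_stalled} consecutive turns");
    }
}
```

An orchestrator would keep one guard per task and call Check for every message it routes.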

Snippet: loop detector

public static class LoopDetector

{

public static bool IsLooping(IReadOnlyList<AgentMessage> history, int window = 4)

{

if (history.Count < window * 2) return false;

string Sig(int offset) => string.Join("|", history.Skip(offset).Take(window).Select(m => m.Kind + ":" + m.Text[..Math.Min(40, m.Text.Length)]));

return Sig(history.Count - window) == Sig(history.Count - window*2);

}

}

Production Checklist (before you ship)

- Secrets: managed identities / Key Vault; no keys in env on shared hosts.

- PII: redact before model calls; add policy filters (regex + classifiers).

- Rate limiting: per agent & per user; backoff with jitter.

- Retry policy: idempotent tool calls; exponential backoff; circuit breaker.

- Telemetry: OTel traces; per‑agent dashboards (latency, cost, success). Alerts on spikes.

- Versioning: pin models & prompts; include schemaVersion in messages.

- Tests: deterministic suites with stubbed tools; golden summaries.

- Budgets: enforce token/time/cost at orchestrator and agent.

- A2A: short‑lived JWT, mTLS, audience scoping, least privilege scopes.
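For the retry and backoff items, a library such as Polly is the usual choice, but the core idea fits in a few lines. The sketch below is a hand-rolled illustration under the assumption that the wrapped call is idempotent; Retry and WithBackoffAsync are hypothetical names.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class Retry
{
    // Retries an idempotent call with exponential backoff and full jitter.
    public static async Task<T> WithBackoffAsync<T>(
        Func<CancellationToken, Task<T>> call, int maxRetries, TimeSpan baseDelay, CancellationToken ct)
    {
        for (var attempt = 0; ; attempt++)
        {
            try
            {
                return await call(ct);
            }
            catch (Exception ex) when (ex is not OperationCanceledException && attempt < maxRetries)
            {
                // Delay is uniform in [0, baseDelay * 2^attempt) so retries from many agents spread out.
                var cap = baseDelay.TotalMilliseconds * Math.Pow(2, attempt);
                await Task.Delay(TimeSpan.FromMilliseconds(Random.Shared.NextDouble() * cap), ct);
            }
        }
    }
}
```

Once the retries are used up the exception filter no longer matches, so the last failure propagates to the caller unchanged. Cancellation is never retried.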

FAQ: Debugging & Looping in Multi‑Agent SK

Log every turn with prompts, tool invocations, tokens, cost, and latency. Use trace IDs to stitch calls across microservices. Keep a single‑step mode to pause after each tool call for inspection. Stub external APIs to make failing runs replayable.

Enforce hard caps (turns/time), carry budget claims in A2A tokens, detect repeating signatures, and add a periodic reflector that either pivots or halts. Require each turn to report a progress delta.

Guardrails belong in code (budgets, PII, routing). Prompts should express role, goal, and format. Keep policy testable and enforceable outside the model.

Start with static roles + orchestrator logic (like this post). Add planning when tasks become long‑horizon or the set of tools grows large.

Track costs per turn and per task. Fail tasks that exceed budgets. Emit cost histograms per agent and dashboard them. Cheap mistakes in dev become expensive in prod.
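Per-task cost tracking can be as simple as the meter sketched below. CostMeter is a hypothetical helper, and the per-1K-token prices are constructor parameters because real prices depend on your provider and model; nothing here reflects actual pricing.

```csharp
using System;

public sealed class CostMeter
{
    private readonly decimal _promptUsdPer1K;
    private readonly decimal _completionUsdPer1K;
    private readonly decimal _budgetUsd;

    public decimal SpentUsd { get; private set; }

    public CostMeter(decimal promptUsdPer1K, decimal completionUsdPer1K, decimal budgetUsd)
    {
        _promptUsdPer1K = promptUsdPer1K;
        _completionUsdPer1K = completionUsdPer1K;
        _budgetUsd = budgetUsd;
    }

    // Records one model call and fails the task as soon as the running total exceeds the budget.
    public decimal Record(int promptTokens, int completionTokens)
    {
        var cost = promptTokens / 1000m * _promptUsdPer1K
                 + completionTokens / 1000m * _completionUsdPer1K;
        SpentUsd += cost;

        if (SpentUsd > _budgetUsd)
            throw new InvalidOperationException($"Cost budget exceeded: {SpentUsd} > {_budgetUsd} USD");

        return cost;
    }
}
```

Create one meter per task, call Record after every model response, and log the returned per-call cost alongside the turn so the dashboards and the enforcement use the same numbers.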

Conclusion: From Prototype to Production Without Drama

You don’t need a 20‑agent circus to get value. Start with two specialized agents, add a thin orchestrator, wrap it in a secure A2A handshake, and ship. From there, layer in budgets, telemetry, and loop guards. If you follow the patterns in this post, you’ll move from “cool demo” to stable, observable, and compliant multi‑agent systems.

What’s the first duo you’ll try – research + summarizer, data wrangler + evaluator, or something spicier? Drop your plan (or roadblocks) in the comments – I’m happy to help you wire it up.