OpenAI’s models, particularly GPT-4, have set new standards in generating human-like text. C#, known for its powerful features and versatility, is a preferred language for many developers building a wide range of applications. By combining these two technologies, developers can create sophisticated, intelligent applications capable of understanding and generating natural language.

Understanding the Parameters

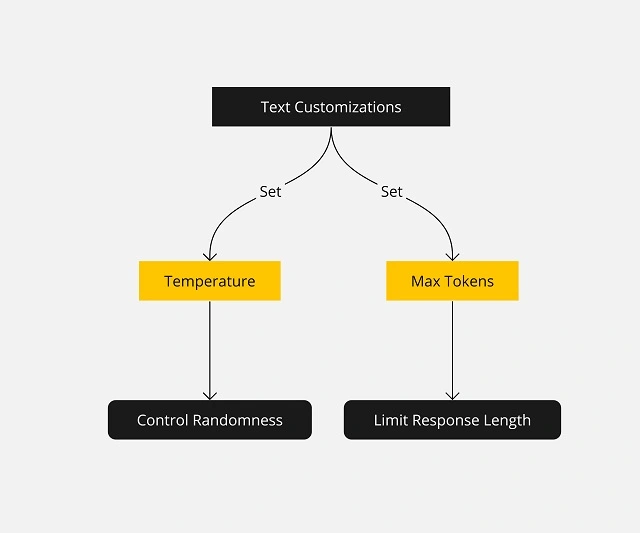

Using OpenAI’s GPT models in C# opens up a world of possibilities. To fully utilize this potential, it’s crucial to understand how to fine-tune the generated output. In this article, we’ll explore two key parameters: temperature and max_tokens. By the end, you’ll have a clear idea of how to effectively manage the randomness and length of AI-generated content.

Temperature

The temperature parameter is a floating-point number that dictates the randomness of the model’s output; the OpenAI API accepts values from 0 to 2. It can be visualized as a dial for controlling the model’s creativity.

- Higher Values (e.g., 0.8 to 1.0): These values make the output more random and creative. The model is more likely to produce diverse and sometimes unexpected results. This can be useful when brainstorming or seeking innovative ideas.

- Lower Values (e.g., 0.2 to 0.5): Lower temperature settings result in more deterministic and focused outputs. The model becomes more conservative, often sticking to more common phrases and ideas.
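To build some intuition for why lower values give more focused output, here is a minimal sketch of temperature-scaled softmax, the standard way a temperature is applied when turning token scores into probabilities. The scores below are made up for illustration, and the function name `SoftmaxWithTemperature` is an assumption of this sketch; how OpenAI applies the parameter internally is not covered by this article.

```csharp
using System;
using System.Linq;

// Temperature-scaled softmax: divide each score by the temperature, then normalize.
static double[] SoftmaxWithTemperature(double[] logits, double temperature)
{
    if (temperature <= 0)
        throw new ArgumentOutOfRangeException(nameof(temperature), "This sketch requires a positive temperature.");

    // Subtract the largest scaled score before exponentiating, for numerical stability.
    double[] scaled = logits.Select(l => l / temperature).ToArray();
    double max = scaled.Max();
    double[] exps = scaled.Select(s => Math.Exp(s - max)).ToArray();
    double sum = exps.Sum();
    return exps.Select(e => e / sum).ToArray();
}

// Hypothetical scores for three candidate next tokens.
double[] exampleLogits = { 2.0, 1.0, 0.1 };

foreach (double t in new[] { 0.2, 0.7, 1.0 })
{
    double[] probs = SoftmaxWithTemperature(exampleLogits, t);
    Console.WriteLine($"T={t}: " + string.Join(", ", probs.Select(p => p.ToString("F3"))));
}
```

At the lowest temperature almost all of the probability lands on the top-scoring token, which is why the output feels deterministic; as the temperature rises, the other candidates get a larger share.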

Code Example:

using System.Net.Http;

using System.Text;

using Newtonsoft.Json;

string prompt = "Describe the importance of code optimization.";

double temperatureSetting = 0.7; // Adjust this for desired randomness

HttpClient client = new HttpClient();

// Serialize the request body; JsonConvert lives in the Newtonsoft.Json namespace

var content = new StringContent(

JsonConvert.SerializeObject(new { prompt = prompt, temperature = temperatureSetting }),

Encoding.UTF8, "application/json"

);

// Send the request and handle the response as needed...

Max_Tokens

max_tokens defines the maximum length of the response in terms of tokens (a token can be as short as one character or as long as one word). It provides a direct way to control the verbosity of the model.

- Setting a Low Token Count: This can be used to get concise answers or summaries. For instance, if you only want a brief overview or a short answer to a query.

- Setting a High Token Count: Useful when seeking detailed explanations, long narratives, or comprehensive answers.
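One consequence of a low limit is that the model may be cut off mid-sentence. The API reports this through the `finish_reason` field of each choice, which is `"length"` when the token limit was hit. The sketch below checks for that; the helper name `WasTruncated` and the sample JSON are assumptions made for illustration (the sample is hand-written, not real API output), and it uses `System.Text.Json` to avoid an extra package.

```csharp
using System;
using System.Text.Json;

// Returns true when the first choice stopped because it reached the token limit.
static bool WasTruncated(string responseJson)
{
    using JsonDocument doc = JsonDocument.Parse(responseJson);
    JsonElement firstChoice = doc.RootElement.GetProperty("choices")[0];
    return firstChoice.GetProperty("finish_reason").GetString() == "length";
}

// Hand-written sample shaped like an API response; not real output.
string sampleResponse =
    "{\"choices\":[{\"message\":{\"role\":\"assistant\",\"content\":\"Code optimization matters because\"}," +
    "\"finish_reason\":\"length\"}]}";

Console.WriteLine(WasTruncated(sampleResponse)
    ? "The response hit the token limit; consider raising max_tokens."
    : "The response finished on its own.");
```

A check like this makes it easier to tell a deliberately short answer from one that ran out of room.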

Code Example:

int tokenLimit = 150; // This will limit the response length

var content = new StringContent(

JsonConvert.SerializeObject(new { prompt = prompt, max_tokens = tokenLimit }),

Encoding.UTF8, "application/json"

);

// Send the request and handle the response as needed...

Combining Temperature and Max_Tokens

While both parameters can be used independently, using them in tandem can be especially powerful. For instance, combining a high temperature with a low max_tokens can give you a burst of creative yet concise ideas. On the other hand, a low temperature combined with a high max_tokens might yield detailed, focused, and conservative explanations.

Code Example:

double combinedTemperature = 0.5;

int combinedTokenLimit = 100;

var content = new StringContent(

JsonConvert.SerializeObject(new { prompt = prompt, temperature = combinedTemperature, max_tokens = combinedTokenLimit }),

Encoding.UTF8, "application/json"

);

// Send the request and process the response...

Best Practices

- Experiment and Iterate: The optimal settings for temperature and max_tokens can vary based on the application. It’s advisable to experiment and iterate to find the sweet spot.

- Understand the Context: Always consider the context in which the AI’s response will be used. For user-facing applications where clarity is paramount, it might be best to err on the side of coherence (lower temperature) and conciseness (moderate max_tokens).
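Experimenting is easier with one small function that builds the whole request, so only the two numbers change between runs. The following is a minimal sketch, assuming the chat completions endpoint (`https://api.openai.com/v1/chat/completions`), which expects a `model` name and a `messages` array rather than the bare `prompt` field used in the snippets above. The helper names, the `"gpt-4"` model choice, and the `OPENAI_API_KEY` environment variable are assumptions of this sketch, and it uses `System.Text.Json` instead of Newtonsoft.Json to stay dependency-free.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;
using System.Text.Json;
using System.Threading.Tasks;

// Builds the JSON body for a chat completion request.
static string BuildRequestBody(string model, string userPrompt, double temperature, int maxTokens)
{
    var body = new
    {
        model,
        messages = new[] { new { role = "user", content = userPrompt } },
        temperature,
        max_tokens = maxTokens
    };
    return JsonSerializer.Serialize(body);
}

// Sends the body and returns the text of the first choice.
static async Task<string> SendAsync(HttpClient client, string apiKey, string requestBody)
{
    using var request = new HttpRequestMessage(HttpMethod.Post, "https://api.openai.com/v1/chat/completions");
    request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", apiKey);
    request.Content = new StringContent(requestBody, Encoding.UTF8, "application/json");

    using HttpResponseMessage response = await client.SendAsync(request);
    response.EnsureSuccessStatusCode();

    using JsonDocument doc = JsonDocument.Parse(await response.Content.ReadAsStringAsync());
    return doc.RootElement.GetProperty("choices")[0]
        .GetProperty("message").GetProperty("content").GetString() ?? "";
}

string requestJson = BuildRequestBody("gpt-4", "Describe the importance of code optimization.", 0.7, 150);
Console.WriteLine(requestJson);

// Only call the API when a key is available in the environment.
var key = Environment.GetEnvironmentVariable("OPENAI_API_KEY");
if (!string.IsNullOrEmpty(key))
{
    using var httpClient = new HttpClient();
    Console.WriteLine(await SendAsync(httpClient, key, requestJson));
}
```

Keeping the body builder separate from the network call means the settings can be inspected or logged without spending tokens.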

Context Matters: Personalizing OpenAI Conversations

Interactions with AI models are becoming increasingly dynamic. Beyond one-off questions, there’s a growing need for models to understand the context of a conversation. For developers working with OpenAI in C#, this means incorporating user context and retaining conversation history for more meaningful exchanges. In this article, we’ll explore how to achieve this.

1. Why Context Matters

Unlike traditional methods that treat each prompt as an isolated request, using context allows the model to understand previous interactions, making the conversation flow more naturally. Here’s why it is important:

Enhancing Understanding

- Human Conversations: Think of human interactions. Our responses aren’t just based on the last sentence spoken but the entire conversation, the mood, previous interactions, and sometimes even the environment. Similarly, when an AI system factors in context, it mimics this holistic understanding.

- Avoiding Repetition: Without context, AI might repeatedly ask the same questions or provide similar responses, leading to user frustration. Remembering past interactions prevents this.

Personalized Interactions

- Tailored Responses: By considering the user’s history and previous inputs, AI can craft responses that feel more personalized and tailored to the individual, enhancing user satisfaction.

- Building Relationships: Over time, as the system recalls past interactions, users might feel a sense of continuity, as if they’re interacting with an entity that ‘knows’ them.

Efficient Problem Solving

- Recall Past Solutions: If a user has had a similar query or problem before, recalling that interaction can lead to quicker solutions.

- Avoiding Misunderstandings: Context can provide clarity. For instance, the word “bank” can mean a financial institution or the side of a river. Knowing the conversation’s context can avoid potential confusions.

Enriched User Experience

- Smooth Conversations: Instead of starting every interaction from scratch, context allows for smooth transitions and continuations, making the conversation flow more naturally.

- Increased Trust: Users might trust the system more if they feel it ‘remembers’ and understands them, leading to increased usage and reliance.

Business Insights

- Analyzing Trends: For businesses, analyzing the context of user interactions can reveal trends, preferences, and areas of improvement.

- Improving Products and Services: Understanding the context behind user queries and feedback can provide insights into product enhancements or service adjustments.

2. Structuring the Conversation

Incorporating context means remembering past interactions. Instead of sending standalone prompts, the conversation with the OpenAI model can be structured as a series of back-and-forths, much like how humans converse. This approach helps the model maintain context throughout the interaction.

Concept of Messages

Conversations are typically structured as an array of messages. Each message in this array has two key components:

- Role: This can be “system”, “user”, or “assistant”. While “user” and “assistant” are self-explanatory, “system” plays a special role, providing high-level instructions for the conversation.

- Content: This is the actual text of the message from the respective role.

Understanding Roles in Structured Conversations

In the structured conversation format that OpenAI utilizes, roles are a foundational concept. They dictate who (or what) is ‘speaking’ or providing information at any given point in the conversation. Each role has a distinct purpose, and understanding these roles is pivotal for shaping the conversation’s flow and outcome.

The “user” Role

- Definition: Represents the end user or any external entity interacting with the model.

- Purpose: To pose questions, provide information, or give instructions to the AI model.

- Example Usage: Queries (“Tell me about quantum physics”), statements (“I love playing chess”), or specific requests (“Translate ‘Hello’ to Spanish”).

The “assistant” Role

- Definition: Represents the AI model’s responses.

- Purpose: To answer questions, provide information, or carry out tasks based on user inputs and system-level instructions.

- Example Usage: Responses to user queries (“Quantum physics is a branch of…”), reactions to statements (“That’s great! Chess is a strategic game.”), or fulfilling user requests (“‘Hello’ in Spanish is ‘Hola’”).

The “system” Role

- Definition: A unique role that provides high-level directives or context to guide the AI’s behavior throughout the conversation.

- Purpose: To set a specific tone, style, or context. System-level instructions aren’t direct questions or commands, but rather overarching guidelines that influence the assistant’s responses.

- Example Usage: Setting conversational tones (“You are a cheerful assistant”), thematic contexts (“You are a historian from the 18th century”), or specific behaviors (“Answer in a poetic format”).

Code Illustration of Roles:

List<Dictionary<string, string>> conversation = new List<Dictionary<string, string>>

{

new Dictionary<string, string> { {"role", "system"}, {"content", "You are a historian specializing in ancient Rome." } },

new Dictionary<string, string> { {"role", "user"}, {"content", "Tell me about Julius Caesar"} }

};

// As the conversation progresses, the assistant's response will be shaped by both the user's query and the system's instruction.

Benefits of Utilizing Roles:

- Contextual Awareness: Roles help the model distinguish between different types of inputs, allowing it to provide contextually relevant responses.

- Flexibility: Developers can dynamically adjust conversations, switching between roles to guide the AI’s behavior or introduce new contexts.

- Consistency: System-level roles ensure that the AI maintains a consistent tone or style throughout a conversation, enhancing user experience.

3. Extending the Conversation

As the user interacts with the assistant, simply append messages to the conversation list, ensuring the model remains aware of the entire conversation history.
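Keeping the model aware of the whole history means appending the assistant’s replies as well as the user’s messages; otherwise the next request only carries one side of the conversation. The sketch below shows one full turn using the same role/content dictionaries. The helper `AddMessage` and the placeholder reply text are assumptions for illustration; in a real application the reply would come from the API response.

```csharp
using System;
using System.Collections.Generic;

// Appends one message to the history in the role/content shape described above.
static void AddMessage(List<Dictionary<string, string>> history, string role, string content)
{
    history.Add(new Dictionary<string, string> { { "role", role }, { "content", content } });
}

var chatHistory = new List<Dictionary<string, string>>();
AddMessage(chatHistory, "system", "You are a historian specializing in ancient Rome.");
AddMessage(chatHistory, "user", "Tell me about Julius Caesar");

// Placeholder standing in for the text returned by the API.
string assistantReply = "(assistant reply goes here)";
AddMessage(chatHistory, "assistant", assistantReply);

// The follow-up question is sent together with everything above it.
AddMessage(chatHistory, "user", "What about his early life?");

foreach (var message in chatHistory)
    Console.WriteLine($"{message["role"]}: {message["content"]}");
```

Because every request carries the full list, the follow-up question can rely on the earlier turns without restating them.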

Code Example:

conversation.Add(new Dictionary<string, string> { {"role", "user"}, {"content", "What about his origins?" } });

// Serialize and send the updated conversation to OpenAI

var updatedContent = new StringContent(

JsonConvert.SerializeObject(new { messages = conversation }),

Encoding.UTF8, "application/json"

);

// Continue with the request and response handling...

4. Leveraging System-Level Instructions

System instructions provide high-level directives to guide the model’s behavior throughout the conversation.

Code Example:

// Initialize the conversation list with a system message

var conversation = new List<Dictionary<string, string>>()

{

new Dictionary<string, string> { { "role", "system" }, { "content", "You are an assistant that speaks like Shakespeare." } }

};

// Serialize and send the conversation with the system instruction

var systemContent = new StringContent(

JsonConvert.SerializeObject(new { messages = conversation }),

Encoding.UTF8, "application/json"

);

// Process the request and observe the Shakespearean response!

5. Considerations for Context Management

- Length Limitations: Be aware of the token limits. If a conversation becomes too long, you might need to truncate or omit parts of it.

- Relevance: Not all previous messages are equally relevant. Periodically assess the conversation history and retain only the messages that provide necessary context.
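Both considerations can be combined into a simple trimming step before each request. The sketch below keeps every system message and then as many of the most recent messages as fit in a budget. The names `EstimateTokens` and `TrimHistory` are assumptions of this sketch, and the four-characters-per-token estimate is only a rough heuristic, not a real tokenizer; an actual token counter would be needed for precise limits.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Rough size estimate: about four characters per token. A heuristic, not a tokenizer.
static int EstimateTokens(string text) => (text.Length + 3) / 4;

// Small helper to build a role/content message.
static Dictionary<string, string> Msg(string role, string content) =>
    new Dictionary<string, string> { { "role", role }, { "content", content } };

// Keeps system messages plus the newest other messages that fit in the budget.
static List<Dictionary<string, string>> TrimHistory(List<Dictionary<string, string>> messages, int tokenBudget)
{
    var systemMessages = messages.Where(m => m["role"] == "system").ToList();
    int used = systemMessages.Sum(m => EstimateTokens(m["content"]));

    var kept = new List<Dictionary<string, string>>();
    // Walk backwards so the newest messages are considered first.
    foreach (var message in messages.Where(m => m["role"] != "system").Reverse())
    {
        int cost = EstimateTokens(message["content"]);
        if (used + cost > tokenBudget) break;
        used += cost;
        kept.Add(message);
    }

    kept.Reverse(); // Restore chronological order.
    return systemMessages.Concat(kept).ToList();
}

var fullHistory = new List<Dictionary<string, string>>
{
    Msg("system", "You are a historian specializing in ancient Rome."),
    Msg("user", "Tell me about Julius Caesar"),
    Msg("assistant", "(a long answer about Julius Caesar would appear here)"),
    Msg("user", "What did the Senate think of him?")
};

foreach (var message in TrimHistory(fullHistory, 40))
    Console.WriteLine($"{message["role"]}: {message["content"]}");
```

Dropping the oldest turns first is only one possible policy; summarizing older messages into a single note is another option when early context still matters.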

Getting Started with OpenAI GPT Instructions in C#

OpenAI’s models, with their vast knowledge and flexibility, can be tailored to specific conversational behaviors using system-level instructions. For C# developers looking to achieve a certain tone, style, or context in their AI-driven applications, these instructions are indispensable. In this article, we’ll explore how to effectively use system-level instructions and illustrate this with C# code snippets.

1. Precision in Responses

- Focused Outputs: System-level instructions can guide the AI to produce outputs that are more aligned with a specific theme or style.

using OpenAI_API;

using OpenAI_API.Chat;

OpenAIAPI api = new OpenAIAPI("OPENAI_API_KEY");

var chatMessages = new List<ChatMessage>()

{

new ChatMessage(ChatMessageRole.System, "Provide factual and concise information."),

new ChatMessage(ChatMessageRole.User, "Write about space exploration.")

};

// Use the API to generate a focused response on space exploration

var focusedResponse = await api.Chat.CreateChatCompletionAsync(chatMessages);

- Filtering Noise: System-level instructions can help eliminate potential irrelevant details or verbosity from the AI’s responses.

using OpenAI_API.Chat;

// Define a list of chat messages with specific roles and instructions

var chatMessages = new List<ChatMessage>()

{

new ChatMessage(ChatMessageRole.System, "Limit the response to three sentences."),

new ChatMessage(ChatMessageRole.User, "Tell me about the solar system.")

};

// Use the API to generate a concise response about the solar system

var conciseResponse = await api.Chat.CreateChatCompletionAsync(chatMessages);

2. Control Over AI Behavior

- Safety: System-level instructions can prevent the generation of inappropriate or potentially harmful content.

using OpenAI_API.Chat;

// Define a list of chat messages with specific roles and instructions

var chatMessages = new List<ChatMessage>()

{

new ChatMessage(ChatMessageRole.System, "Avoid violent or offensive themes."),

new ChatMessage(ChatMessageRole.User, "Write a story about a disagreement.")

};

// Use the API to generate a story about a disagreement with the specified guidelines

var safeResponse = await api.Chat.CreateChatCompletionAsync(chatMessages);

- Adherence to Guidelines: For applications with specific standards, system-level instructions ensure the AI’s responses are compliant.

using OpenAI_API.Chat;

// Define a list of chat messages with specific roles and instructions

var chatMessages = new List<ChatMessage>()

{

new ChatMessage(ChatMessageRole.System, "Stick to widely accepted medical guidelines."),

new ChatMessage(ChatMessageRole.User, "Provide general advice for headaches.")

};

// Use the API to generate medical advice based on the chat messages

var medicalAdvice = await api.Chat.CreateChatCompletionAsync(chatMessages);

3. Adaptability to Diverse Applications

- Flexibility: System-level instructions allow the same AI model to be tailored for a range of applications.

using OpenAI_API.Chat;

// Define a list of chat messages with specific roles and instructions

var chatMessages = new List<ChatMessage>()

{

new ChatMessage(ChatMessageRole.System, "Use a friendly and casual tone."),

new ChatMessage(ChatMessageRole.User, "Greet the user.")

};

// Use the API to generate a friendly and casual greeting

var chatbotGreeting = await api.Chat.CreateChatCompletionAsync(chatMessages);

- Customization: Tailor the AI’s behavior to align with brand voice or specific requirements.

using OpenAI_API.Chat;

// Define a list of chat messages with specific roles and instructions

var chatMessages = new List<ChatMessage>()

{

new ChatMessage(ChatMessageRole.System, "Use the company's formal and enthusiastic tone."),

new ChatMessage(ChatMessageRole.User, "Describe our new product.")

};

// Use the API to generate a brand-consistent response based on the chat messages

var brandResponse = await api.Chat.CreateChatCompletionAsync(chatMessages);

4. Enhanced User Experience

- Consistency: Instructions can ensure the AI provides uniform responses across interactions.

using OpenAI_API.Chat;

// Define a list of chat messages with specific roles and instructions

var chatMessages = new List<ChatMessage>()

{

new ChatMessage(ChatMessageRole.System, "Always suggest titles from the 21st century."),

new ChatMessage(ChatMessageRole.User, "Recommend a book.")

};

// Use the API to generate a consistent reply based on the chat messages

var consistentReply = await api.Chat.CreateChatCompletionAsync(chatMessages);

- Relevance: Guiding the AI ensures outputs align with user queries.

using OpenAI_API.Chat;

// Define a list of chat messages with roles and content

var chatMessages = new List<ChatMessage>()

{

new ChatMessage(ChatMessageRole.System, "Focus on the basics suitable for a middle school student."),

new ChatMessage(ChatMessageRole.User, "How does photosynthesis work?")

};

// Create a chat completion using the API and get the relevant answer

var relevantAnswer = await api.Chat.CreateChatCompletionAsync(chatMessages);

5. Efficient Model Training

- Guided Learning: Real-time behavior guidance can be more efficient than extensive fine-tuning.

using OpenAI_API.Chat;

// Define a list of chat messages with specific roles and instructions

var chatMessages = new List<ChatMessage>()

{

new ChatMessage(ChatMessageRole.System, "Draw parallels to scientific theories but keep it simple."),

new ChatMessage(ChatMessageRole.User, "Discuss the concept of time.")

};

// Use the API to generate a guided response based on the chat messages

var guidedResponse = await api.Chat.CreateChatCompletionAsync(chatMessages);

There you have it! OpenAI GPT is a powerful tool, and with the right instructions, you’re in control. Whether you’re making a chat helper, a writing app, or any tool that talks back, use these tips to get OpenAI GPT to do what you want in C#.

In the realm of AI text generation, OpenAI’s GPT stands out as a significant advancement. It’s not just about its capabilities but also about how you can fine-tune its settings to achieve desired results. By understanding how to adjust these settings, developers can create responses that are both accurate and tailored to specific needs. As we delve deeper into AI, mastering these adjustments will be crucial for crafting effective and meaningful interactions. With OpenAI GPT, we’re not just generating text; we’re creating rich, customized experiences.

Happy customizing and generating text! 🙂

Frequently Asked Questions (FAQ)

OpenAI GPT is a cutting-edge language model created by OpenAI. It is designed to comprehend and generate human-like text based on the input it receives.

To control how random the output is, adjust the temperature parameter. A higher value (e.g., 0.9) encourages more random and creative outputs, while a lower value (e.g., 0.2) results in more deterministic and focused responses.

Using the max_tokens parameter, you can set a limit on the response length, ensuring concise or detailed outputs as per your needs.

Prompts guide the initial direction of the AI’s response, while system-level instructions offer high-level directives to shape its behavior and outputs. They’re crucial for tailoring the AI’s response to specific requirements.

By leveraging user feedback and iterative prompting, you can continually refine and optimize the generated text.

While OpenAI GPT is highly creative, with the right instructions and parameters it can also be directed to produce highly specific, relevant, and tailored content for a wide range of applications.

You can access OpenAI GPT through the OpenAI API. There are several SDKs and libraries, including those for C#, to help integrate it into your applications.
